Hello Folks! The Manifold team is back again with our twelfth research log. This is our platform for weekly updates on the progress we are making toward next-generation Intelligent Systems, and for sharing other interesting things happening in the broader research community.

NEKO Project

The NEKO Project aims to build the first large-scale, Open Source "Generalist" Model, trained on numerous modalities. You can learn more about it here. This week the team has been heads down on several directions related to training our preliminary NEKO implementation on different modalities.

We’ve been making headway on image-text paired data, working on data ingestion for the image captioning objective. We’ll have more updates on this in the coming weeks, but you can check out our in-progress implementations here: https://github.com/ManifoldRG/NEKO

AgentForge Project

The AgentForge project aims to build generic tools and models that make it easier to build Intelligent Agents capable of complex actions, including digital tool use. We’re currently engaged in a community-wide survey, and have recently performed in-depth reviews of papers like Reflexion.

Pulse of AI

A few exciting projects in the research community have caught our eye this week.

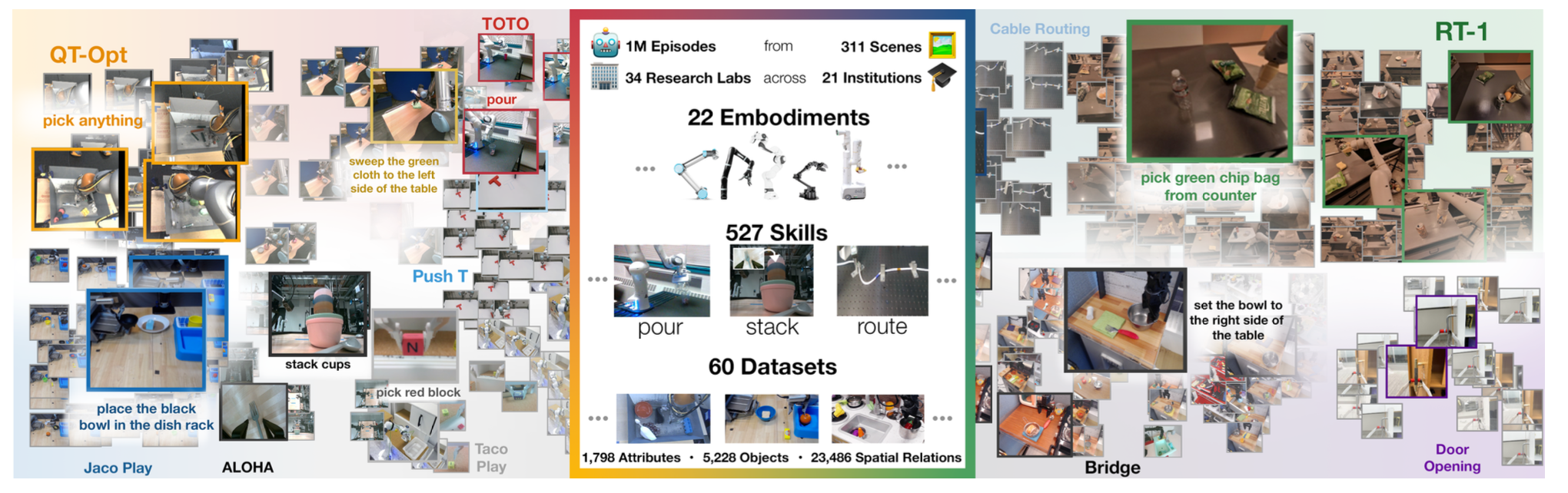

We’re extremely interested in “generalist” models, so we were understandably excited by DeepMind’s release of a massive new robotics dataset for training generalist robotics models. Check out the blog post and paper below!

Kosmos-G pushes modality-to-modality generation forward, presenting a model that trains an aligner network using only the text modality in order to align its output space with the input space, using CLIP.

Some other interesting studies we have been looking at are below. We highly recommend checking these out.

If you want to see more of our updates as we work to explore and advance the field of Intelligent Systems, follow us on Twitter, LinkedIn, and Mastodon!