Welcome to Research Log #025! We document weekly research progress across the various initiatives in the Manifold Research Group, and highlight breakthroughs from the broader research community we think are interesting in the Pulse of AI!

We’re growing our core team and pursuing new projects. If you’re interested in working together, join the conversation on Discord and check out our GitHub.

NEKO

The NEKO Project aims to build the first large scale, Open Source "Generalist" Model, trained on numerous modalities including control and robotics tasks. You can learn more about it here.

- Tool usage: The team is working on a large PR that refactors the model's CLI so that NEKO is easier for everyone to use and interact with. This should make the model more accessible when it is released!

- Datasets: In previous research logs, we talked about the v0 dataset and making it available. This week, we started writing a loading script for the MMC4 dataset so that we can access it easily. Expect more details in the future.

AgentForge

The AgentForge Project aims to build models, tools, and frameworks that allow anyone to build much more powerful AI agents capable of using tools and interacting with the digital and physical worlds.

This week we have been focusing on the Agent Survey. Specifically, we are discussing the relevance of tools versus memory in LLMs. We are also discussing internally whether methods from other subfields could make these models more helpful.

Pulse of AI

There have been some exciting advancements this week, from an AI that can solve geometry problems at the level of IMO gold medalists to a model that can predict the depth of any image. Read on for this week's Pulse of AI!

AlphaGeometry

XTX Markets launched a competition roughly two months ago to build an AI that can solve International Mathematical Olympiad (IMO) problems at a gold medal level. The competition is called the AIMO Prize, and it has a prize fund of 10 million dollars. Since the launch, several companies have thrown their hats into the ring to try to win.

AlphaGeometry is a theorem prover made by Google DeepMind and the first big step toward that goal. It solves olympiad geometry problems at a level approaching the average IMO gold medalist.

The training dataset is fully synthetic. The authors sample random premises, build randomly generated problems from them using random acyclic graphs, and end up with synthetic problems paired with their answers. They used this method to generate millions of problems, producing a robust training dataset that basically no one has ever seen.
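To make the idea of "random premises plus an acyclic graph of derivations" more concrete, here is a toy sketch. It is not AlphaGeometry's geometry engine: the facts, the `RULES` table, and the function names are all hypothetical, and the real system works with geometric constructions rather than letters. The sketch samples premises, forward-chains until nothing new can be derived, and then traces a chosen conclusion back to the premises it actually depends on.

```python
import random

# Hypothetical toy rules: each rule derives one new fact from a set of known facts.
RULES = [
    ({"a", "b"}, "e"),
    ({"b", "c"}, "f"),
    ({"e", "f"}, "g"),
    ({"c", "d"}, "h"),
    ({"g", "h"}, "i"),
]
BASE_FACTS = ["a", "b", "c", "d"]


def deduce(premises):
    """Forward-chain to a fixed point. Returns {fact: parent facts}, an acyclic graph."""
    parents = {p: frozenset() for p in premises}
    changed = True
    while changed:
        changed = False
        for needs, new_fact in RULES:
            if new_fact not in parents and needs.issubset(parents):
                parents[new_fact] = frozenset(needs)
                changed = True
    return parents


def traceback(parents, goal):
    """Walk the graph backwards and collect the premises the goal depends on."""
    if not parents[goal]:
        return {goal}  # a premise has no parents
    needed = set()
    for parent in parents[goal]:
        needed |= traceback(parents, parent)
    return needed


def sample_problem(rng):
    """Sample random premises and return (minimal premises, conclusion), or None."""
    premises = rng.sample(BASE_FACTS, k=rng.randint(2, len(BASE_FACTS)))
    parents = deduce(premises)
    derived = sorted(fact for fact, ps in parents.items() if ps)
    if not derived:
        return None  # these premises did not lead anywhere
    goal = rng.choice(derived)
    return sorted(traceback(parents, goal)), goal


rng = random.Random(0)
problems = [p for p in (sample_problem(rng) for _ in range(10)) if p is not None]
```

Each entry in `problems` is a small synthetic "problem" (the premises) with a known "answer" (the conclusion and the derivation behind it), which is the shape of data the paragraph above describes.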

The model is a GPT-style language model that helps a theorem prover engine generate the answers. One would expect the model to be huge, but it only has 151 million parameters. If you want to read more about it, the paper can be found here, and if you want to run it locally, the source code is public here!

Depth Anything

Researchers at the University of Hong Kong and TikTok released a model that can estimate depth in pictures and videos quite accurately. It is a new foundation model trained on 1.5 million labeled examples and roughly 62 million unlabeled examples.

They gathered 6 public datasets containing roughly 62M unlabeled images and generated depth maps for them using Monocular Depth Estimation (MDE) models. These MDE models can be thought of as teachers, and the student is their Depth Anything model.

The student model was trained on both the manual labels from the existing datasets and the pseudo labels produced by the teacher model. This model will most likely be used everywhere from TikTok videos to Zoom calls. The code and the weights are open source and available on GitHub and Hugging Face. If you would like to dig a bit more into the techniques they used, the paper is here.
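The teacher-student flow can be sketched in a few lines. This is only an illustration of the data flow under strong simplifying assumptions: a one-dimensional least-squares line stands in for the depth networks, the "images" are single numbers, and `fit_line`, `predict`, and `true_depth` are hypothetical names, not anything from the Depth Anything code.

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares for y = w * x + b (stand-in for training a model)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var = sum((x - mean_x) ** 2 for x in xs)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    w = cov / var
    return w, mean_y - w * mean_x


def predict(model, xs):
    w, b = model
    return [w * x + b for x in xs]


def true_depth(x):
    """Hypothetical ground truth, used only to create the toy labels."""
    return 2.0 * x + 1.0


rng = random.Random(0)

# Small labeled set (manual labels) and a much larger unlabeled set.
labeled_x = [rng.uniform(0, 1) for _ in range(20)]
labeled_y = [true_depth(x) + rng.gauss(0, 0.05) for x in labeled_x]
unlabeled_x = [rng.uniform(0, 1) for _ in range(500)]

# 1. Train the teacher on the labeled data only.
teacher = fit_line(labeled_x, labeled_y)

# 2. The teacher produces pseudo labels for the unlabeled data.
pseudo_y = predict(teacher, unlabeled_x)

# 3. Train the student on manual labels and pseudo labels together.
student = fit_line(labeled_x + unlabeled_x, labeled_y + pseudo_y)
```

In this toy the student ends up matching the teacher, because both are the same simple model class. The sketch is only meant to show where the manual labels and the pseudo labels enter training.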

Asynchronous Local-SGD Training for Language Modeling

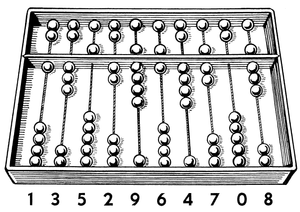

Stochastic Gradient Descent (SGD) is a classical way to train machine learning models. Researchers at Google DeepMind have presented an empirical study of how to train an LLM asynchronously using Local-SGD.

Normally, you have to use a lot of resources to train LLMs, but with Local-SGD, several devices can each train a copy of the same model and periodically “meet in the middle” to update the shared weights. The problem with this method is what is normally called the straggler effect, where “faster devices are idle waiting for slower ones to catch up, undermining the overall efficiency of the system”. It arises because every device has to reach the synchronization point before the weights can be updated globally.
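A minimal sketch of synchronous Local-SGD on a toy problem may help here. It assumes a single scalar weight, a least-squares loss, and full-batch gradients on each worker's shard instead of sampled mini-batches; the function names and hyperparameters are hypothetical and not taken from the paper.

```python
def local_steps(theta, shard, lr, num_steps):
    """Run a few gradient steps on one worker's shard of the loss mean((theta - y)^2)."""
    for _ in range(num_steps):
        grad = sum(2 * (theta - y) for y in shard) / len(shard)
        theta -= lr * grad
    return theta


def local_sgd(shards, theta=0.0, lr=0.1, steps_per_round=5, rounds=20):
    """Synchronous Local-SGD: train locally, then average across all workers."""
    for _ in range(rounds):
        # Every worker starts the round from the same global weights.
        results = [local_steps(theta, shard, lr, steps_per_round) for shard in shards]
        # Synchronization barrier: the average needs *all* workers to be done,
        # so fast workers sit idle until the slowest one finishes.
        theta = sum(results) / len(results)
    return theta


shards = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # one data shard per worker
theta = local_sgd(shards)
```

The averaging line is where the straggler effect shows up: nothing can proceed until the slowest worker has returned its weights.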

Figure 5: How Asynchronous Local-SGD works compared against Synchronous Local-SGD. Source: paper.

Asynchronous Local-SGD (ALSGD) solves this problem because the global weights can be updated any time a worker finishes its local steps. Still, ALSGD has problems with the momentum of the gradients. To mitigate them, the authors propose two techniques: Delayed Nesterov momentum update (DN) and Dynamic Local Updates (DyLU). With these two techniques, they get results similar to synchronous Local-SGD.

If you want to delve deeper, check out their paper. And if you are interested in this federated style of training, we talked a little bit about another federated training method in Research Log #19.
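The asynchronous variant can be simulated on a toy problem with an event queue. This sketch shows only the basic idea of applying each worker's update as soon as it arrives: it uses a plain scaled update on the server and does not implement DN or DyLU. The scalar weight, the `step_times` values, and all function names and hyperparameters are assumptions made for illustration.

```python
import heapq


def local_steps(theta, shard, lr, num_steps):
    """Run a few gradient steps on one worker's shard of the loss mean((theta - y)^2)."""
    for _ in range(num_steps):
        grad = sum(2 * (theta - y) for y in shard) / len(shard)
        theta -= lr * grad
    return theta


def async_local_sgd(shards, step_times, theta=0.0, inner_lr=0.1, outer_lr=0.2,
                    num_local_steps=5, total_updates=200):
    """Simulated asynchronous Local-SGD with a plain (momentum-free) server update."""
    updates_per_worker = [0] * len(shards)
    # Each event is (finish_time, worker_id, weights the worker started from).
    events = [(step_times[w] * num_local_steps, w, theta) for w in range(len(shards))]
    heapq.heapify(events)
    for _ in range(total_updates):
        now, w, start = heapq.heappop(events)  # the next worker to finish
        end = local_steps(start, shards[w], inner_lr, num_local_steps)
        delta = end - start          # computed from possibly stale weights
        theta += outer_lr * delta    # applied immediately, no barrier
        updates_per_worker[w] += 1
        # The worker pulls the latest global weights and starts again right away.
        heapq.heappush(events, (now + step_times[w] * num_local_steps, w, theta))
    return theta, updates_per_worker


shards = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
step_times = [1.0, 1.0, 3.0]  # the third worker is three times slower
theta, updates_per_worker = async_local_sgd(shards, step_times)
```

No worker ever waits, but the slow worker's updates are computed from older weights and arrive less often, which hints at why naive asynchronous updates need extra care.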

If you want to see more of our updates as we work to explore and advance the field of Intelligent Systems, follow us on Twitter, LinkedIn, and Mastodon!